I’ve spent the last few months honing my skills at optimizing our e-commerce sites for search engines. It’s been a long, arduous task, one that requires the utmost patience. When things go wrong, it seems like the world is ending. But, as one of my favorite books famously says, Don’t Panic!

While getting up to speed on search engine optimization, I’ve been looking for a tool to help me track our rankings for the keywords we’re going after. I had been using a huge Excel spreadsheet to track rankings every two weeks, but it was a pain in the butt to update. I found some online tools that would let you track a few keywords, but nothing all that awesome. That, and I really wanted a desktop application for this. Don’t ask me why.

So, I was reading YOUMoz the other day and came across a post about the author’s troubles with MSN Live Search. She mentioned that she used this tool to track keyword positions, so I decided it was worth a look. I downloaded the 30-day trial version and installed it on my MacBook Pro.

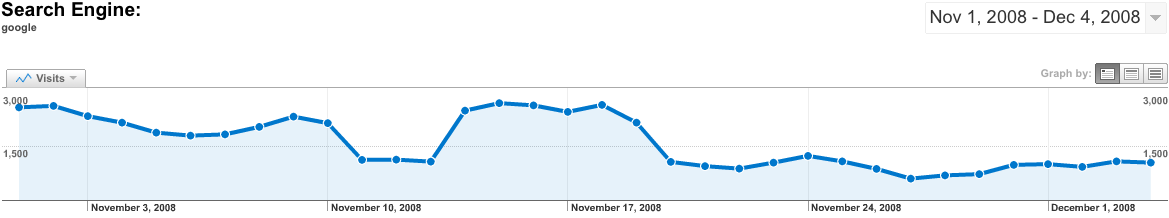

Setting it up with the keywords I was interested in for my site was a little time-consuming, but I didn’t mind. I gave it a run and got a great breakdown of the keywords we ranked well for and the ones we didn’t. This is cool, since now I can see where I need to put in some work. But that wasn’t the coolest part. The following day, I ran it again, and I could compare that day’s results to the previous day’s. Kick. Ass. Even better, it stores your results for each day, so you can see how you did over the course of a month, a quarter, or a year.

I find this to be a huge time saver because I no longer have to update my rankings by hand. All I have to do is add any new keywords I want to track. When I want to update my positioning results, I just click a “Play” button and away the tool goes. I highly recommend this application to anyone tracking their SERPs in any of the major search engines.