I’ve had my 17″ MacBook Pro Core Duo for two years now, and I don’t have a single bad thing to say about it. However, starting about six months ago, the left fan began making a lot of noise. It sounded like the bearings in the fan itself were going. Worse, even though the left fan’s speed seemed to be set correctly compared to the right fan, the MacBook got really, really hot on the left side. I put up with it for quite a while, but I finally gave in and decided I needed to do something about it.

Generally I’m not sketched out by taking a computer apart, but my MacBook was a little different. I didn’t want to ruin the case, and I certainly didn’t want to fry any of the tiny components inside it. This computer is basically my lifeline for everything I do in development, both freelance and full-time, so I couldn’t afford to kill it, especially since I don’t have a reliable backup machine (something I’m currently working on resolving). So, even with the slight fear of messing up my MacBook, I trudged along.

I bought my new fans for $39.95 each plus S&H at ifixit.com. Even better, they have two pretty good guides on replacing the fans in my MacBook. The left fan instructions are here and the right fan instructions are here. I was able to follow the right fan instructions to a T. The left fan instructions didn’t quite match my machine. First, I had to remove the AirPort card. That wasn’t too difficult, as it’s held down by a single Torx screw. Also, the left speaker was actually screwed down in my MacBook. It didn’t appear to be screwed down in the one used in the guide, since the instructions said to just lift it up. I couldn’t do that, and I was glad I spotted the screw and didn’t force anything (that would have been bad!).

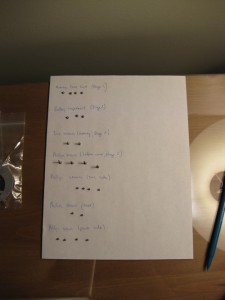

In all, it took about an hour to take the MacBook apart, replace the fans, and put the case back together. I definitely took my time, since I didn’t want to mess up, and I was very careful not to touch anything inside the computer that I didn’t have to. I’ve included some interesting photos I took during the repair, which are below. One thing I did that I highly recommend: as you remove the screws from the case and other parts of the MacBook, place them on a white piece of paper and label them, or use some plastic baggies and do the same. A lot of the screws look alike, and you definitely want each one to go back in its proper place.

If you have fan trouble in your MacBook, I think anyone with some technical ability can do this repair themselves instead of paying Apple or another Apple repair shop to do it. I’d imagine you’d pay over $100 in labor, plus the cost of parts, to have them do it for you.